- AI Report by Explainx

Sora Beats ChatGPT in First-Week Downloads👑

From Sora hitting 1M downloads in days to Google’s AI shoe try-on, TOUCAN’s massive agent dataset, and Anthropic’s new global access restrictions.

The AI world keeps breaking records, and this week it’s all about speed, scale, and smarter experiences.

🎬 Sora races past one million downloads in under five days, outpacing ChatGPT’s launch and sparking new debates around AI video generation, copyright, and digital ethics.

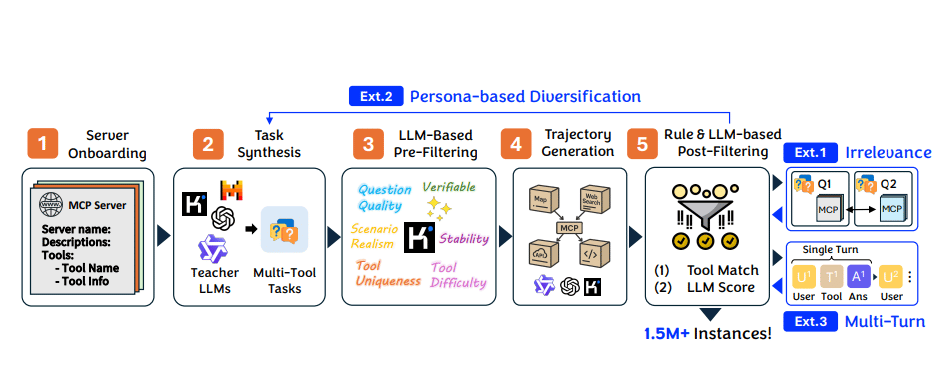

🧠 TOUCAN, the world’s largest open agent dataset, debuts with 1.5 million tool-use trajectories, redefining how LLMs learn real-world reasoning and multi-step task execution.

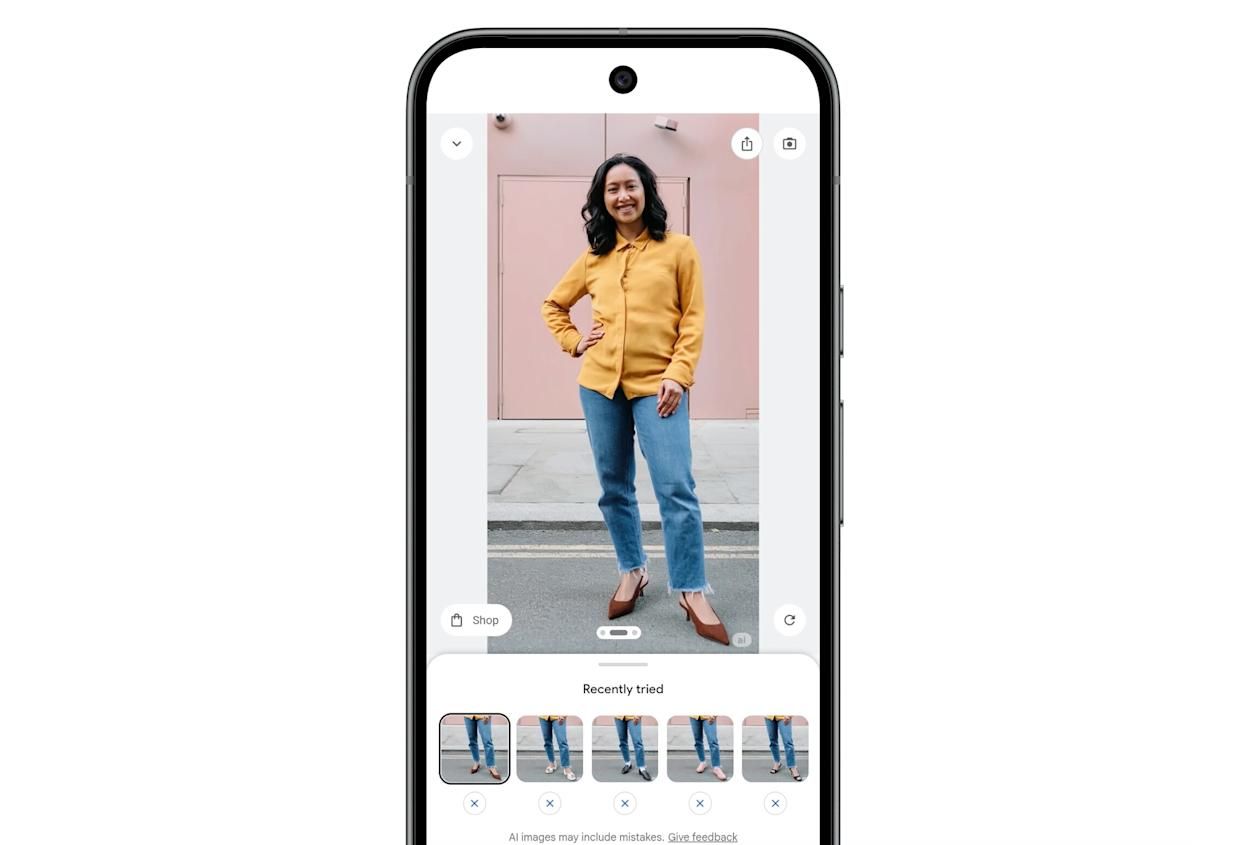

👟 Google’s Virtual Try-On expands to shoes, letting users preview sneakers and heels directly in Search — bringing AI-powered shopping closer to real-life fitting rooms.

🚫 Anthropic tightens its Terms of Service, blocking access from companies linked to restricted regions to ensure ethical, secure, and compliant AI use.

From record-breaking adoption to open data revolutions and ethical safeguards, this week’s updates show how quickly, and how responsibly, the AI frontier is evolving.

Sora Hits 1M Downloads Faster Than ChatGPT

OpenAI's video-generating app Sora passed one million downloads less than five days after its late-September launch, outpacing ChatGPT's initial download rate on iOS despite being invite-only and limited to the U.S. and Canada. In its first week, Sora recorded about 627,000 iOS downloads, compared with 606,000 for ChatGPT in its first week. The app reached No. 1 on the U.S. App Store by October 3, ahead of other major AI app launches such as Anthropic's Claude and Microsoft's Copilot. Sora uses OpenAI’s Sora 2 video model to create realistic AI-generated videos, including deepfake content, and it has ignited debate over potential copyright infringement and other ethical concerns. To address these challenges while supporting rapid user growth, OpenAI is working on new moderation features and on giving rights holders more control over their content.

Largest Open Agent Dataset Released

TOUCAN is a massive open-source dataset of 1.5 million tool-agentic task trajectories synthesized from nearly 500 real-world Model Context Protocol (MCP) servers, covering over 2,000 tools across diverse domains. It addresses the limited scale, diversity, and realism of earlier LLM agent training data by offering authentic, multi-step, multi-turn tool usage with rigorous quality filtering. The dataset was built with a pipeline that uses multiple LLMs for task synthesis and trajectory generation, with extensions for edge cases, diverse personas, and multi-turn dialogs. Models fine-tuned on TOUCAN outperform larger closed-source counterparts on key agentic benchmarks such as BFCL V3 and MCP-Universe, showing significant gains in complex tool use and reasoning. The dataset is also fully reproducible and permissively licensed, making it a resource for advancing LLM agent research.
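To make "tool-use trajectory" and "quality filtering" concrete, here is a minimal sketch in Python. It is not TOUCAN's actual schema or filtering pipeline: the `Step` and `Trajectory` classes, their field names, and the single rule in `passes_basic_filter` are all hypothetical, chosen only to illustrate the idea of checking that each tool call is well-formed and answered.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Step:
    """One turn in a trajectory (hypothetical layout, not TOUCAN's schema)."""
    role: str                        # "user", "assistant", or "tool"
    content: str = ""
    tool_name: Optional[str] = None  # set on assistant tool calls and tool replies


@dataclass
class Trajectory:
    available_tools: List[str]
    steps: List[Step] = field(default_factory=list)


def passes_basic_filter(traj: Trajectory) -> bool:
    """Keep a trajectory only if every assistant tool call names a known tool
    and is immediately followed by a result from that same tool."""
    for i, step in enumerate(traj.steps):
        if step.role == "assistant" and step.tool_name is not None:
            if step.tool_name not in traj.available_tools:
                return False
            nxt = traj.steps[i + 1] if i + 1 < len(traj.steps) else None
            if nxt is None or nxt.role != "tool" or nxt.tool_name != step.tool_name:
                return False
    return True


# Illustrative data only; the tool names are made up.
good = Trajectory(
    available_tools=["get_weather"],
    steps=[
        Step("user", "Do I need an umbrella in Oslo?"),
        Step("assistant", "Checking the forecast.", tool_name="get_weather"),
        Step("tool", "rain, 9C", tool_name="get_weather"),
        Step("assistant", "Yes, rain is expected."),
    ],
)
bad = Trajectory(
    available_tools=["get_weather"],
    steps=[
        Step("user", "Book me a flight."),
        Step("assistant", "Booking now.", tool_name="book_flight"),
    ],
)

kept = [t for t in (good, bad) if passes_basic_filter(t)]
```

A real pipeline would apply many more checks (for example, judging answer quality), but the shape is the same: generate candidate trajectories, then keep only those that pass every filter.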

Google’s Virtual Try-On: Shop Smarter, Look Better

Google is expanding its AI-powered virtual try-on feature to include shoes, allowing shoppers to preview sneakers, heels, and boots directly in Google Search using a full-length photo. The technology uses advanced AI perception models to map footwear realistically onto a person’s image, capturing details like shape, depth, texture, and fit for a near-authentic preview before purchase. The feature is launching for select users in the US and will roll out soon in Australia, Canada, and Japan. It makes online shopping more interactive and convenient by letting users compare different styles and share their looks with friends without visiting a physical store.

Anthropic Clamps Down on AI Access in Risky Regions

Anthropic has updated its Terms of Service to strengthen restrictions on the use of its AI services by companies and organizations linked to unsupported regions, such as China, due to legal, regulatory, and national security concerns. The new policy prohibits entities that are more than 50% owned, directly or indirectly, by firms headquartered in these restricted jurisdictions from accessing Anthropic’s products, regardless of where those entities operate. This step aims to close loopholes where companies circumvent restrictions by using subsidiaries in other countries. Anthropic stresses this move is to prevent misuse of its AI technologies for adversarial military, intelligence, or authoritarian purposes and to support U.S. and allied strategic and democratic interests. The company also advocates for strong export controls and security-focused AI development policies. This is a pioneering move among major U.S. AI firms, reflecting growing geopolitical tensions and the strategic significance of AI capabilities.
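The "more than 50% owned, directly or indirectly" test can be illustrated with a little arithmetic. The sketch below is one possible reading, not Anthropic's actual method: it assumes indirect ownership is computed by multiplying stakes along each chain of holdings and summing over chains. The company names, the `stakes` layout, and both function names are hypothetical.

```python
from typing import Dict, FrozenSet

# Hypothetical ownership graph: owner -> {owned entity: fraction held}.
Stakes = Dict[str, Dict[str, float]]


def effective_stake(stakes: Stakes, owner: str, target: str,
                    _seen: FrozenSet[str] = frozenset()) -> float:
    """Fraction of `target` held by `owner`, directly plus through intermediaries.

    Assumption: stakes multiply along a chain and add across separate chains.
    """
    if owner in _seen:  # ignore circular holdings
        return 0.0
    total = 0.0
    for held, fraction in stakes.get(owner, {}).items():
        if held == target:
            total += fraction
        else:
            total += fraction * effective_stake(stakes, held, target, _seen | {owner})
    return total


def is_majority_owned(stakes: Stakes, owner: str, target: str,
                      threshold: float = 0.5) -> bool:
    """True if `owner` holds strictly more than `threshold` of `target`."""
    return effective_stake(stakes, owner, target) > threshold


# ParentCo holds 80% of HoldCo, which holds 70% of Subsidiary: 0.8 * 0.7 = 56%.
chain_a = {"ParentCo": {"HoldCo": 0.8}, "HoldCo": {"Subsidiary": 0.7}}
# With a 60% stake in HoldCo instead, the indirect share is 0.6 * 0.7 = 42%.
chain_b = {"ParentCo": {"HoldCo": 0.6}, "HoldCo": {"Subsidiary": 0.7}}

restricted_a = is_majority_owned(chain_a, "ParentCo", "Subsidiary")
restricted_b = is_majority_owned(chain_b, "ParentCo", "Subsidiary")
```

Under this reading, the first subsidiary would fall under the restriction wherever it operates, while the second would not. Legal ownership tests can aggregate stakes differently, so treat this purely as an illustration of why a subsidiary in another country does not automatically escape the rule.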

Hand Picked Video

In this video, we dive deep into the recent Sora developments and what they reveal about AI companies' relationships with artists.

Top AI Products from this week

Attrove AI - Attrove is an AI-driven platform that seamlessly synthesizes information & communication, ensuring key insights and expertise are preserved and accessible so you can fuel productivity and continuity across your organization.

RedPill - RedPill delivers AI privacy by design. All workloads execute in secure hardware enclaves, and every LLM query comes with a cryptographic proof so you never have to trust us blindly. Integrate easily via our simple SDK / API.

Intryc - Intryc scores and gives feedback on real support conversations. Now with AI simulations, agents can role play real past tickets and get scored instantly, cutting onboarding time by 50 percent and saving CX leads 12 to 15 hours of manual training each week.

Layercode CLI - Build a voice AI agent in minutes, straight from your terminal. One command scaffolds your project with built-in tunneling, sample backends, and global edge deployment. Connect via webhook, use existing agent logic, pay only for speech (silence is free).

Collect 2.0 - Collect has already helped hundreds of B2B SaaS companies improve their customer onboarding. With our new AI features, we’re taking it a step further by making onboarding faster, smarter, and effortless at scale.

Androidify - The classic Androidify app is back, now powered by Gemini. Turn your selfie or a simple prompt into a unique Android bot avatar. Use AI to generate custom backgrounds with image editing, create shareable stickers, and bring your bot to life.

This week in AI

AI Browser Use Challenges - AI browser agents face hurdles like dynamic web content, data privacy, and scaling automation. Amazon’s Nova Act tool aims to enable smarter AI agents that tackle these issues efficiently.

Tiny Recursive Model - Tiny Recursive Model (TRM) uses a small 2-layer network with recursion, surpassing larger models in reasoning tasks like Sudoku and ARC-AGI, while needing fewer parameters and training steps.

Secure AI Credentials - 1Password’s Secure Agentic Autofill securely injects credentials into browsers for AI agents only with human approval, keeping passwords hidden and reducing credential risk in AI workflows.

Figma & Google Cloud AI - Figma partners with Google Cloud to integrate Gemini AI models, boosting image generation speed by 50%, enhancing creative workflows for 13M users with faster design and editing tools.

Figure 03 Robot - Figure 03, a scaled-up humanoid robot for home and industry, features improved tactile hands, wireless charging, and advanced sensors. It is built for mass production and powered by the Helix AI system.

Paper of The Day

Large multimodal models can be fine-tuned to learn new specialized skills by selectively updating specific components of the model, mainly the language model part. Techniques such as tuning the self-attention projection layers or selectively tuning parts of the feed-forward network (MLP) help the model learn new tasks effectively while minimizing the loss of previously acquired capabilities. This approach mitigates catastrophic forgetting, which is often linked to shifts in the model's output distribution. Fine-tuning only parts of the model preserves stability and general performance better than full fine-tuning, enabling large multimodal models to continually improve without repeated full retraining. These strategies also outperform commonly used forgetting mitigation methods by balancing learning and retention with simpler adaptation steps.
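The idea of "selectively updating specific components" can be sketched as a mask over parameter names. This is a minimal illustration, not the paper's code: the parameter names below follow a naming style common in transformer checkpoints but are hypothetical, as are `SELF_ATTN_PROJ_KEYS` and `trainable_mask`.

```python
from typing import Dict, Iterable, Tuple

# Hypothetical substrings marking self-attention projection weights.
SELF_ATTN_PROJ_KEYS: Tuple[str, ...] = (
    "self_attn.q_proj",
    "self_attn.k_proj",
    "self_attn.v_proj",
    "self_attn.o_proj",
)


def trainable_mask(param_names: Iterable[str],
                   component_prefix: str = "language_model.",
                   keys: Tuple[str, ...] = SELF_ATTN_PROJ_KEYS) -> Dict[str, bool]:
    """Mark a parameter trainable only if it belongs to the chosen component
    (here the language model) and matches one of the projection keys."""
    return {
        name: name.startswith(component_prefix) and any(k in name for k in keys)
        for name in param_names
    }


# Made-up parameter names for a small multimodal model.
names = [
    "vision_tower.layers.0.self_attn.q_proj.weight",
    "language_model.layers.0.self_attn.q_proj.weight",
    "language_model.layers.0.self_attn.o_proj.weight",
    "language_model.layers.0.mlp.up_proj.weight",
    "language_model.embed_tokens.weight",
]

mask = trainable_mask(names)
trainable = [n for n, on in mask.items() if on]
```

In a training framework, a mask like this would decide which parameters receive gradient updates (in PyTorch, for instance, by setting each parameter's `requires_grad` flag), while everything else stays frozen. Only the two language-model attention projections end up trainable in this example; the vision tower, MLP, and embeddings are left untouched.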

To read the whole paper 👉️ here.