- AI Report by Explainx

Say goodbye to context switching

Google’s Gemini CLI Extensions, Tencent’s Hunyuan-Vision-1.5, and Duke’s AI-designed nanoparticles are driving smarter AI workflows, advanced vision, and next-gen drug delivery.

The AI world keeps pushing boundaries — and this week, it’s all about customization, intelligence, and real-world impact.

🧩 Google’s Gemini CLI Extensions let developers personalize the AI command line with custom tools, commands, and smarter workflows — bringing true flexibility and integration to AI-assisted development.

👁️ Tencent’s Hunyuan-Vision-1.5 redefines multimodal intelligence with “thinking-on-image” capability, outperforming GPT-4V in visual reasoning and setting new global benchmarks for multilingual and vision tasks.

💊 Duke University’s TuNa-AI platform merges machine learning with lab automation to design next-gen nanoparticles for drug delivery — making cancer treatments safer, faster, and more effective.

From coding smarter to seeing deeper and healing faster, these breakthroughs prove one thing: AI’s future isn’t just intelligent — it’s connected, creative, and life-changing.

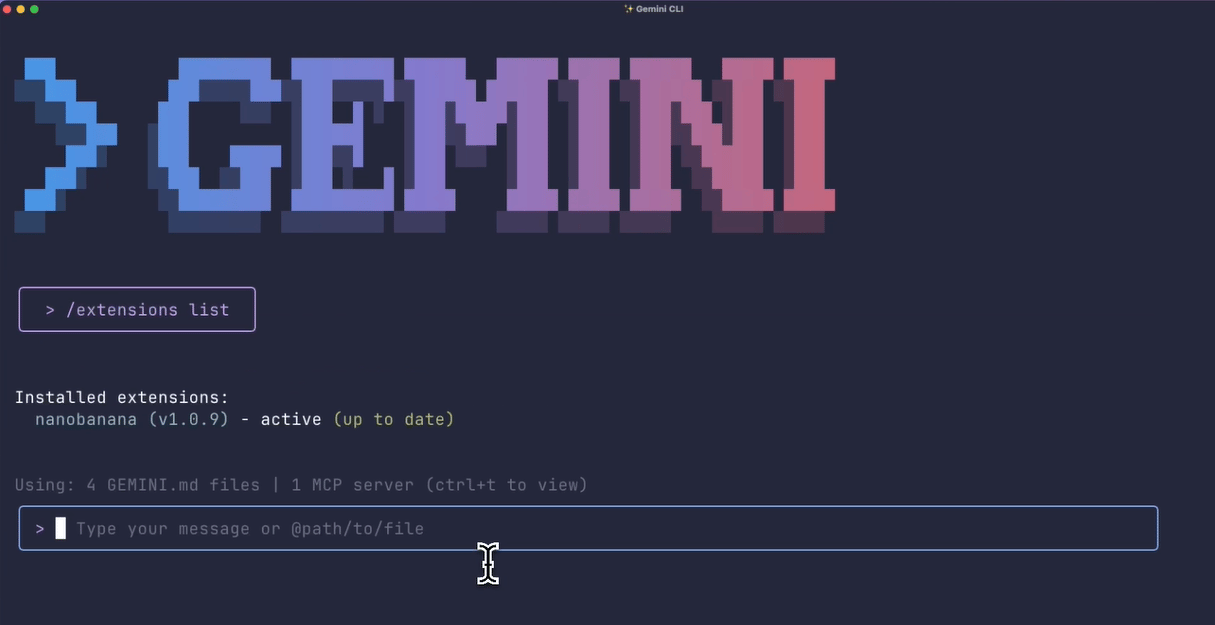

Gemini CLI Extensions: Customize and Connect

Gemini CLI extensions enable users to customize and extend the Gemini command-line interface by adding new tools and commands. With a simple setup using Node.js and TypeScript, developers can create extensions packaged with manifest files, MCP servers for tool integration, and custom commands for streamlined workflows. Extensions also support persistent context through GEMINI.md files, making AI interactions smarter and more personalized. These extensions can be easily installed and shared via Git repositories, allowing the Gemini CLI to connect directly with popular tools and services, enhancing developer productivity and enabling seamless AI-assisted workflows.
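To make the packaging idea concrete, here is a minimal sketch of what an extension manifest might contain. The manifest is plain JSON, so the sketch simply builds it in Python and prints it. The field names (`name`, `version`, `mcpServers`, `contextFileName`) reflect our understanding of the `gemini-extension.json` layout and should be treated as assumptions to check against the official documentation; the extension name `my-extension` and the server path `dist/server.js` are hypothetical placeholders.

```python
import json


def build_manifest(name, version, server_script):
    """Return a dict shaped like an extension manifest (assumed layout)."""
    return {
        "name": name,
        "version": version,
        # Assumed: one MCP server entry, launched with Node.js.
        "mcpServers": {
            name: {"command": "node", "args": [server_script]},
        },
        # Assumed: the file that carries the persistent context.
        "contextFileName": "GEMINI.md",
    }


# Hypothetical extension name and server path, for illustration only.
manifest = build_manifest("my-extension", "0.1.0", "dist/server.js")
print(json.dumps(manifest, indent=2))
```

Saved next to a GEMINI.md file and a compiled MCP server, a manifest along these lines is roughly what would be committed to the Git repository that others install from.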

Tencent’s Hunyuan-Vision-1.5: Smarter Vision AI Unveiled

Tencent has introduced Hunyuan-Vision-1.5, a vision-language model built on a Mamba-Transformer hybrid architecture that delivers advanced multilingual and multimodal understanding, reasoning, and “thinking-on-image” capabilities. Ranked third on LMArena and recognized as China’s top-performing model, it excels in image, video, OCR, diagram, and 3D spatial tasks while supporting multiple languages. Tencent plans to open-source the model, technical report, and checkpoints (A56B, 4B) by late October 2025, along with inference tools and “thinking-on-image” support. The hybrid architecture is designed for efficient computation, and the model is reported to outperform rivals like GPT-4V on key benchmarks while supporting enterprise deployment via Tencent Cloud. It is pre-trained on over 1.6 billion image-text pairs, optimized for high-resolution and text-heavy image tasks, and has set new state-of-the-art scores for multimodal reasoning on several global benchmarks.

Smarter Drug Delivery with AI Nanoparticles

Duke researchers have developed an AI-powered platform combining machine learning with automated wet lab techniques to design optimized nanoparticles for drug delivery, significantly improving cancer drug formulations. This approach enabled the creation of a new nanoparticle formulation for the leukemia drug venetoclax that dissolved better and more effectively inhibited leukemia cell growth. The AI also helped re-engineer the trametinib cancer therapy, reducing a potentially toxic component by 75% while enhancing drug distribution in lab mice. This platform, called TuNa-AI, increases successful nanoparticle formation by over 40% compared to standard methods and promises safer, more effective therapeutic delivery for a range of diseases. The technology marks a major advancement in designing and optimizing nanoparticle-based drug delivery systems with potential broad biomedical impact.

Hand Picked Video

In this video, we look at QwQ-32B, a newly released reasoning model from China that is making waves in the AI world. It is being called the smallest yet most powerful reasoning model out there. But what makes QwQ-32B so special? How does it compare to DeepSeek R1 and other AI giants?

Top AI Products from this week

Glue - Glue is the first platform for agentic team chat, where humans, their tools, and AI collaborate side by side. With native AI, goal-oriented threads, and MCP-powered workflows, teams can cut noise and turn conversations into outcomes.

ElevenLabs UI - ElevenLabs UI is an open-source component library built on shadcn/ui to help you build AI audio and voice agent experiences faster. It provides pre-built, customizable components for voice chat, transcription, and more, all under an MIT license.

Tasklet - Tasklet makes it easy to automate any business process with AI — just describe what you want in plain English. Tasklet connects with any API or MCP server and can control a computer in the cloud. If your business uses it, it can automate it.

Edge Hound - Agentic AI-Driven Trading Intelligence. Thousands of stocks. Other assets en route. Save hours of research time and make smarter decisions. Tap into AI-powered sentiment tracking across financial news, social media, and forums to outperform everyone.

Magic Studio - Create beautiful, fun art from text, in seconds. Describe what you want to see, pick a style and customize as you like. Try different variations, till you like one. Ideal for moodboards, concepts, covers, posters, socials. Free to use, no signup needed.

Yousteady 0.1v - Learning shouldn’t feel chaotic. In a world of endless information, Steady makes learning simple, structured, and stress-free.

This week in AI

Facebook Reels Update - Facebook’s new Reels update boosts personalization with a smarter recommendations engine showing 50% more fresh videos. Friend bubbles reveal friends’ likes and enable private chats. AI-powered suggested searches help discover related content effortlessly.

Google Opal Expansion - Google’s no-code AI app builder Opal expands to 15 new countries with improved debugging, faster app creation, and parallel workflow execution for building AI mini-apps easily. Users create apps by describing workflows in plain language, with options to visually edit and share them seamlessly.

Anthropic’s India Expansion - Anthropic will open its first India office in Bengaluru in early 2026 to access local AI talent and support sectors like education, healthcare, and agriculture with AI solutions while expanding Claude's Indic language support.

Grok Imagine Update - Elon Musk's Grok Imagine AI is now free and adds audio with custom speech and faster 24 FPS video, but it lacks safeguards, enabling easy celebrity deepfakes and explicit content.

LlamaFarm AI Platform - LlamaFarm is an open-source framework for building retrieval-augmented AI apps locally or remotely, with a user-friendly CLI, YAML-configured pipelines, and full extensibility.

xAI Grok Tools Update - xAI’s Grok tools will soon let users fetch data from Gmail, Slack, Notion, and X, synthesize multi-source information, and get stock updates, enhancing AI-powered workflows.

Paper of The Day

This paper studies how large language model (LLM) agents can better handle long-horizon tasks requiring many planning steps. It compares two approaches: conventional planning with explicit executable action lists (PwA), and planning with abstract action schemas (PwS) that instantiate templates into specific actions. The authors show that PwA works well for small to medium action spaces but struggles when action spaces grow large and combinatorial, creating cognitive overload. PwS scales better in large, complex environments by reducing decision complexity through abstraction. They introduce a "Cognitive Bandwidth Perspective" to explain this trade-off and identify an inflection point where PwS becomes superior. The paper also discusses how model capabilities affect this point and recommends targeted training to improve schema instantiation, making PwS a promising paradigm for scalable autonomous agents.
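The scaling argument can be illustrated with a toy count. The sketch below is not the paper's method or benchmark. It assumes a hypothetical environment with three action schemas and a pool of objects, then compares how many fully specified actions a PwA-style planner would have to choose among with how many schemas a PwS-style planner picks from before filling in arguments. All schema and object names are invented for illustration.

```python
from itertools import permutations

# Hypothetical schemas: name -> number of argument slots (not from the paper).
SCHEMAS = {
    "move": 2,     # move(obj, target)
    "stack": 2,    # stack(obj, target)
    "inspect": 1,  # inspect(obj)
}


def grounded_actions(schemas, objects):
    """PwA-style view: enumerate every fully instantiated action."""
    actions = []
    for name, arity in schemas.items():
        # Each ordered choice of distinct objects is a separate action.
        for args in permutations(objects, arity):
            actions.append((name, *args))
    return actions


def instantiate(schema_name, args, schemas):
    """PwS-style view: pick a schema first, then fill its slots."""
    arity = schemas[schema_name]
    if len(args) != arity:
        raise ValueError(f"{schema_name} expects {arity} argument(s)")
    return (schema_name, *args)


for n in (3, 10, 30):
    objects = [f"obj{i}" for i in range(n)]
    explicit = len(grounded_actions(SCHEMAS, objects))
    print(f"{n} objects: {explicit} explicit actions vs {len(SCHEMAS)} schemas")
```

With 3, 10, and 30 objects, the explicit action list grows to 15, 190, and 1770 entries, while the schema list stays at three. The cost of the schema route moves into the instantiation step, which is the part the authors suggest improving through targeted training.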

To read the whole paper 👉️ here.