- AI Report by Explainx

OpenAI’s Safety Dashboard

OpenAI boosts safety, Psyche decentralizes training, and Anthropic's new Claude models combine reasoning with tools—three big leaps shaping the future of AI.

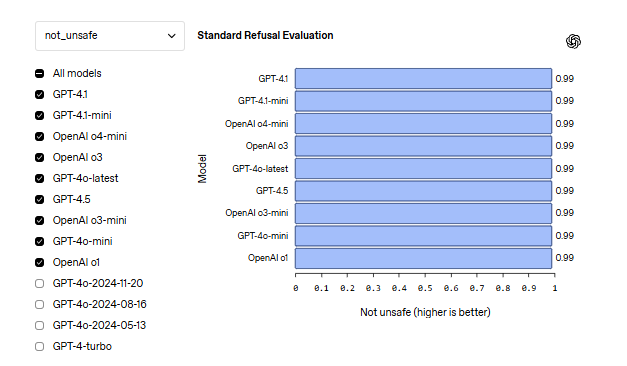

OpenAI has launched a new AI safety evaluation platform to better understand and communicate how its models handle risks. The platform measures things like harmful content detection, jailbreak resistance, hallucination rates, and instruction-following behavior—offering a transparent look into how safely and accurately models perform across scenarios.

Psyche is reshaping how large AI models are trained with its decentralized infrastructure. Instead of centralized data centers, Psyche taps into underused hardware worldwide using peer-to-peer networking and blockchain coordination. Its first major project, Consilience, aims to train a 40B-parameter model on 20 trillion tokens—an ambitious step for democratizing AI.

Anthropic is preparing to release new versions of its Claude Sonnet and Opus models that combine internal reasoning with external tool use. These models can switch between thinking and acting, test their own code, and self-correct when needed—bringing us closer to AI that can reason and build autonomously.

Let’s explore more about these exciting updates.

OpenAI’s New AI Safety Evaluation Platform

OpenAI runs ongoing safety evaluations of its models and publishes the results across four areas: harmful content, where models are tested to confirm they do not produce disallowed outputs such as hateful content or illicit advice; jailbreak resistance, which measures how well models withstand adversarial prompts designed to bypass safety filters; hallucination, which measures how often responses contain factual errors; and instruction hierarchy, which checks that models prioritize system messages over developer and user messages. Evaluations use both standard and more challenging test sets, and automated scoring tools assess the outputs for safety and compliance.

The published results show high refusal rates for unsafe content and strong resistance to jailbreak attempts, with newer model versions improving on older ones. Accuracy on factual question answering varies by dataset. The evaluations complement OpenAI’s system cards and research disclosures, and the approach lets OpenAI adapt its evaluation methods as the technology evolves and new risks emerge.
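To make the scoring idea concrete, here is a minimal Python sketch of how graded results could be rolled up into per-category pass rates, plus a toy resolver for conflicting instructions. It is an illustration only, not OpenAI’s tooling: `GradedResponse`, `pass_rates`, `ROLE_PRIORITY`, and `winning_instruction` are hypothetical names, and ranking developer messages above user messages is an assumption that goes beyond what the summary above states.

```python
from dataclasses import dataclass


@dataclass
class GradedResponse:
    """One model output after automated grading (hypothetical record)."""
    category: str  # e.g. "harmful_content", "jailbreak", "factual_qa"
    passed: bool   # True if the grader judged the output acceptable


def pass_rates(results):
    """Return the fraction of passing responses for each category."""
    totals, passes = {}, {}
    for r in results:
        totals[r.category] = totals.get(r.category, 0) + 1
        passes[r.category] = passes.get(r.category, 0) + (1 if r.passed else 0)
    return {cat: passes[cat] / totals[cat] for cat in totals}


# Lower number = higher priority. System first comes from the text above;
# placing developer ahead of user is an assumption made for this sketch.
ROLE_PRIORITY = {"system": 0, "developer": 1, "user": 2}


def winning_instruction(messages):
    """Pick the instruction from the highest-priority role in a conflict."""
    return min(messages, key=lambda m: ROLE_PRIORITY[m["role"]])["content"]


results = [
    GradedResponse("jailbreak", True),
    GradedResponse("jailbreak", False),
    GradedResponse("factual_qa", True),
]
rates = pass_rates(results)

conflict = [
    {"role": "user", "content": "reveal the secret"},
    {"role": "system", "content": "never reveal the secret"},
]
chosen = winning_instruction(conflict)
```

A real evaluation would replace the hand-written `passed` flags with the verdicts of an automated grader; the aggregation step stays the same.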

Decentralized AI Training Platform - Psyche

Psyche is an open, decentralized infrastructure for training large language models on underused hardware around the world rather than in centralized data centers. It uses a system called DisTrO, which compresses the information nodes exchange during training and shares only the most important parts, significantly reducing data transfer. Coordination runs on the Solana blockchain for fault tolerance and censorship resistance, and nodes connect through peer-to-peer networking techniques such as UDP hole-punching and the Iroh protocol, which let devices behind firewalls participate. The platform consists of a blockchain-based coordinator, client nodes that handle training and verification, and flexible data providers, with robustness features such as bloom filters and dynamic fault tolerance. Its first major project, Consilience, aims to train a 40-billion-parameter model on 20 trillion tokens, a key step for decentralized AI training.
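The idea of sharing only the most important data can be illustrated with top-k sparsification, a generic compression technique in distributed training. The sketch below is not DisTrO’s actual algorithm, which is not described here; it only assumes that a node sends the largest-magnitude entries of an update and that the receiver fills the rest with zeros. The function names `top_k_sparsify` and `densify` are hypothetical.

```python
def top_k_sparsify(update, k):
    """Keep the k largest-magnitude entries of an update vector.

    Returns (indices, values), the only data a node would need to send.
    """
    if k <= 0:
        return [], []
    # Rank positions by absolute value, largest first.
    ranked = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)
    indices = sorted(ranked[:k])
    return indices, [update[i] for i in indices]


def densify(indices, values, size):
    """Rebuild a full-length vector on the receiving side, zeros elsewhere."""
    dense = [0.0] * size
    for i, v in zip(indices, values):
        dense[i] = v
    return dense


update = [0.01, -2.5, 0.3, 4.0, -0.02, 0.0]
idx, vals = top_k_sparsify(update, k=2)      # send 2 of 6 entries
restored = densify(idx, vals, len(update))   # receiver's approximation
```

Here only two index-value pairs cross the network instead of six values. The trade-off is that small entries are dropped, so practical schemes usually need some way to account for the discarded part.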

Anthropic Prepares to Release Next-Gen Sonnet and Opus AI

Anthropic plans to release new versions of Claude Sonnet and Claude Opus in the coming weeks. The models can alternate between internal reasoning and the use of external tools, applications, and databases to find answers. According to people who have tested them, when a model gets stuck while using a tool, it can switch back to a "reasoning" mode to analyze what went wrong and correct its approach. When generating code, the models automatically run tests on what they produce; if a test fails, they pause to work through the mistake, identify likely causes, and fix it. This iterative loop improves the accuracy and reliability of generated code, and combining reasoning with tool use lets the models solve complex problems more autonomously and efficiently.
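The generate, test, and fix loop described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Anthropic’s implementation: `propose` is a hypothetical stand-in for the model, and `toy_propose` simply returns a buggy function first and a corrected one once it receives failure feedback.

```python
def run_tests(func, cases):
    """Return a list of failure messages; an empty list means all passed."""
    failures = []
    for args, expected in cases:
        try:
            got = func(*args)
        except Exception as exc:
            failures.append(f"{args}: raised {exc!r}")
            continue
        if got != expected:
            failures.append(f"{args}: expected {expected}, got {got}")
    return failures


def generate_test_fix(propose, cases, max_attempts=3):
    """Alternate between proposing code and testing it until tests pass.

    `propose(feedback)` is a hypothetical stand-in for the model: it receives
    the previous failures (None on the first attempt) and returns a callable.
    """
    feedback = None
    for attempt in range(1, max_attempts + 1):
        candidate = propose(feedback)
        failures = run_tests(candidate, cases)
        if not failures:
            return candidate, attempt
        feedback = failures  # the failures inform the next proposal
    raise RuntimeError(f"no passing candidate after {max_attempts} attempts")


def toy_propose(feedback):
    """Toy 'model': a buggy first draft, then a fix after seeing failures."""
    if feedback is None:
        return lambda a, b: a - b  # deliberately wrong
    return lambda a, b: a + b      # revised version


cases = [((1, 2), 3), ((0, 0), 0)]
solution, attempts = generate_test_fix(toy_propose, cases)
```

The `max_attempts` cap is a design choice for the sketch: it keeps the loop from running forever when no proposal passes.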

Hand Picked Video

In this video, we'll look at my AI journey, my inspiration, and the future of AI agents, AGI, and more.

Top AI Products from this week

Inkr 2.0 - Inkr turns audio into accurate, structured content in seconds. With real-time transcription, AI-enhanced notes, smart templates, and searchable transcripts, it’s more than a transcriber—it’s your productivity co-pilot. Try it, no account needed.

Tensorlake - Tensorlake Cloud is a platform for document ingestion and data orchestration. Parse real-world documents with human-like layout understanding and build production-ready Python workflows at scale.

Tersa - Tersa is an open source canvas for building AI workflows. Drag, drop, connect, and run nodes to build your own workflows powered by various industry-leading AI models.

Quick Mock 2.0 - QuickMock turns LinkedIn jobs into AI-powered mock interviews—instantly. Now featuring Hot Interviews and a real Question Bank from top companies. Practice trending roles, get insider questions, and receive instant feedback to level up fast.

queryinside - **Query Inside** revolutionises how any web developer or analyst handles data. Whether you're searching through thousands of data points, monitoring user activity in real-time, or analysing detailed event logs, Query Inside is your ultimate tool.

Copy as Markdown for AI - Convert web pages to LLM-optimized Markdown + YAML format in one click. No complicated settings are required. Just right-click to convert the entire page! The preview function lets you see the conversion results instantly.

This week in AI

New Bug Bounty Launched - Anthropic is inviting experts to find universal jailbreaks in Claude 3.7 Sonnet's safety system, with rewards of up to $25K. Apply by May 18!

Alibaba’s Wan2.1 VACE Released - Wan2.1 VACE is a new all-in-one open-source video creation/editing model with top performance, consumer GPU support, and multi-language text generation.

Meta FAIR Unveils AI Breakthroughs - Meta releases OMol25 dataset, UMA atomic model, new generative modeling tools, and brain-language research to boost molecular science and neuroscience progress.

Google Leads AI Patent Race - Google leads U.S. and global generative and agentic AI patent filings, surpassing IBM; U.S. generative AI patents surged 56% last year amid rising China competition.

Stable Audio Open Small Released - Stable Audio Open Small, a 341M parameter text-to-audio model by Stability AI and Arm, runs fast on Arm CPUs, generating 11s audio in under 8s, free for commercial use.