- AI Report by Explainx

Microsoft Launches Cancer-Mapping AI🔬🧬

Microsoft’s new tool maps tumors, Z.ai releases a powerful long-context AI model, and OpenAI prepares clearer, sharper Image-2 models.

AI is breaking new ground again, from mapping tumors like star charts to pushing multimodal limits and sharpening the next generation of image models. Here’s what just dropped:

🧬 Microsoft’s GigaTIME – Tumors Mapped Like Digital Universes

Microsoft’s new open-source AI turns routine $5–10 pathology slides into high-resolution tumor microenvironment maps, simulating protein behavior instantly and analyzing data from 14,000+ patients. A leap toward precision oncology at population scale.

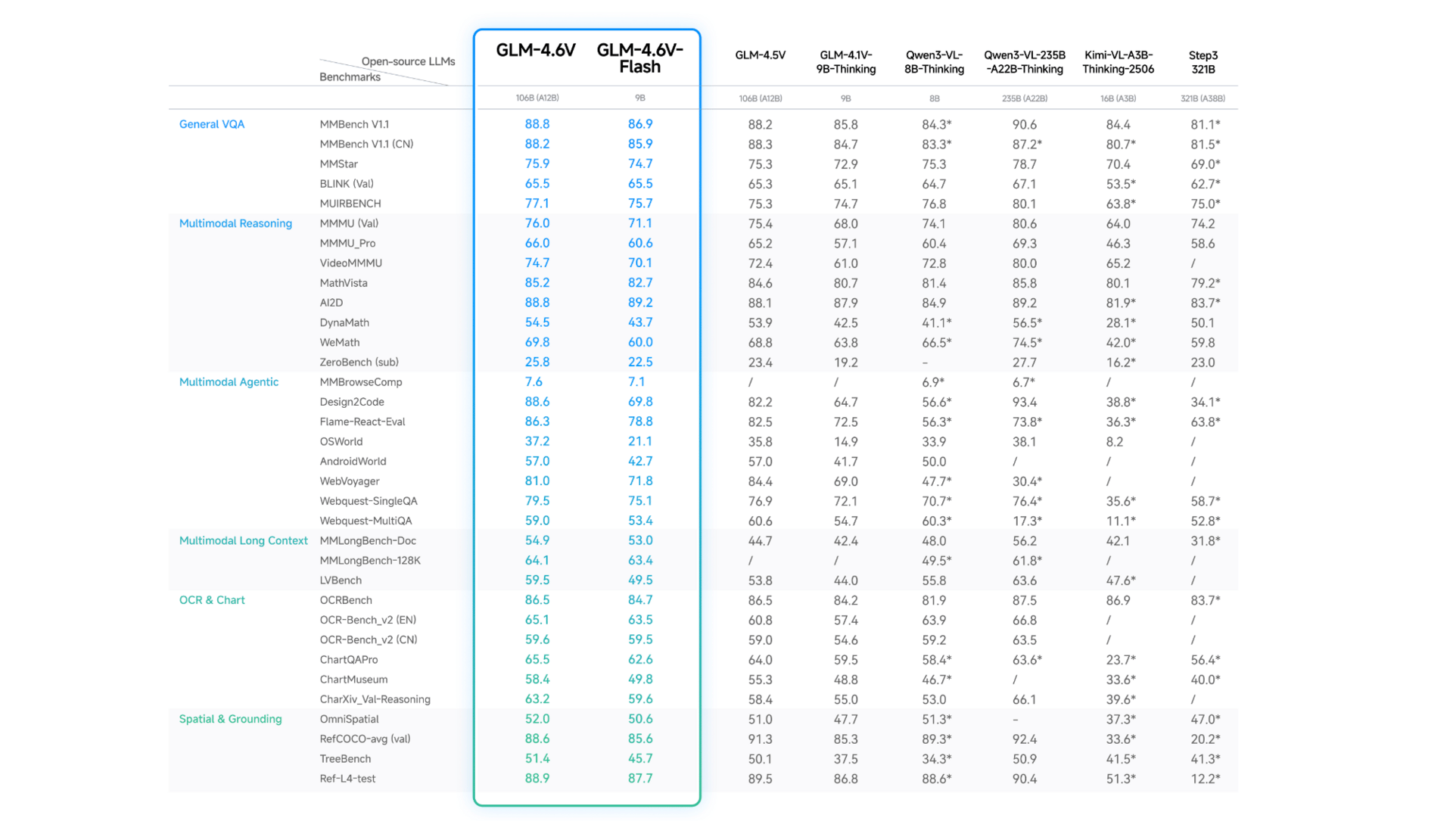

🖼️ GLM-4.6V – Z.ai’s Multimodal Beast with 128K Context

A fully open-source 106B/9B vision-language model pair that handles ~150 pages, 200 slides, or 1-hour videos in one go. SOTA on visual reasoning, native multimodal function calling, and agentic workflows for search, code, and design-to-code.

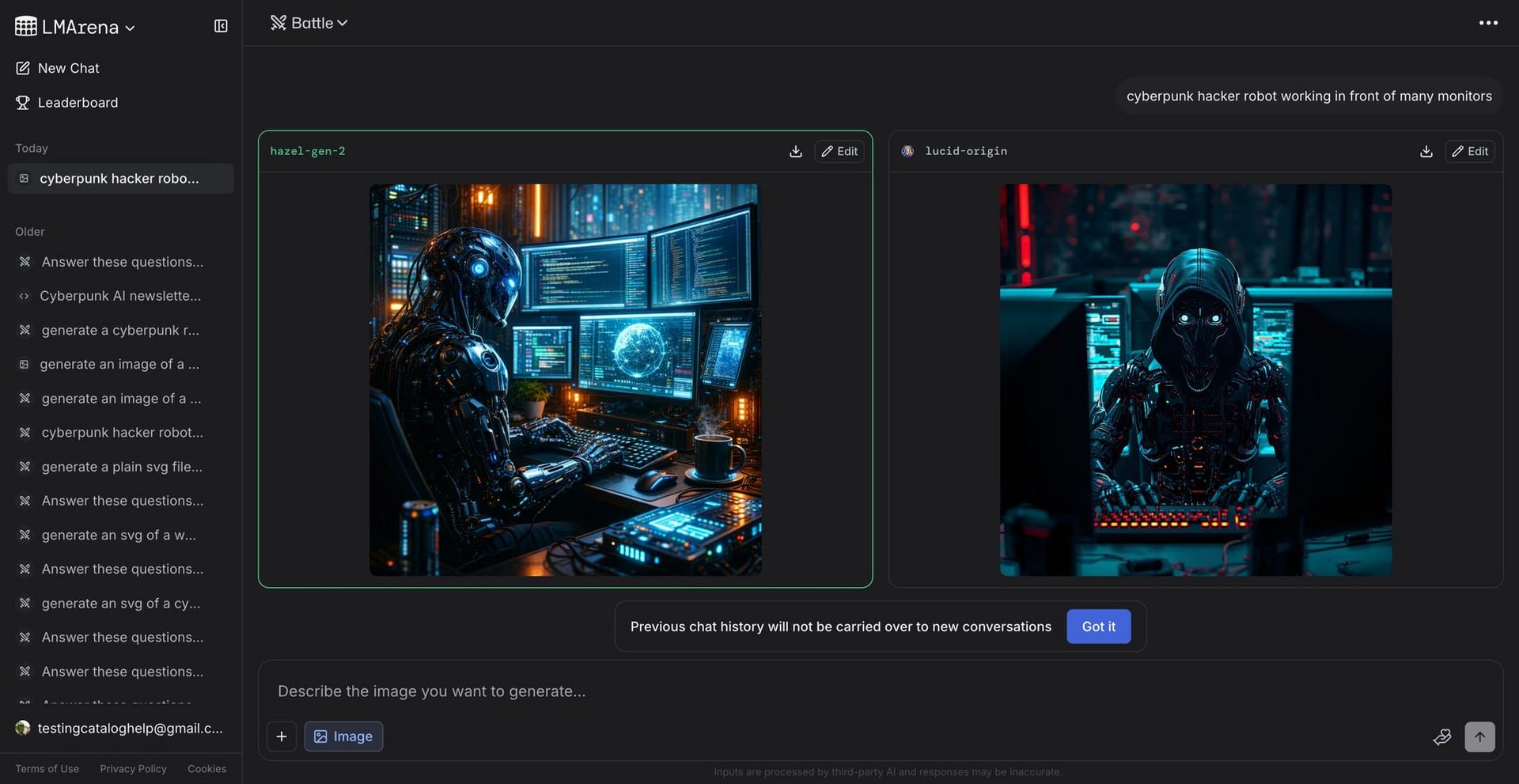

🎨 OpenAI Image-2 – Sharper, Cleaner, More Accurate

Image-2 and Image-2-mini (“Chestnut” & “Huzzlenut”) surface in evals with crisper detail, fixed color issues, and a DALL-E-style creative boost. They are built for ChatGPT integration and pro-grade content creation, with the rollout expected to be coordinated with GPT-5.2.

AI isn’t slowing down; it’s upgrading every field at once.

Microsoft’s tumor-mapping AI

GigaTIME, an open-source AI tool from Microsoft Research built in collaboration with Providence and the University of Washington, analyzes tumor microenvironments at population scale. Every minute, four Americans receive a cancer diagnosis and start navigating one of medicine's most complex diseases. GigaTIME turns routine $5-10 pathology slides into detailed digital maps that show how immune cells and tumors interact through protein activation, surfacing hidden patterns in seconds where conventional lab tests are costly and take days. Drawing on data from more than 14,000 patients across 51 hospitals and 1,000+ clinics, and on 40 million cells imaged with hematoxylin and eosin (H&E) staining and multiplex immunofluorescence (mIF) across 21 proteins, it simulates dozens of proteins instantly. That makes it possible to run tens of thousands of scenarios to predict cancer behavior, match personalized treatments, counter resistance, and improve outcomes. Described in a paper in Cell, the model is publicly available on Microsoft Foundry Labs and Hugging Face, and it opens the door to population-scale spatial proteomics with "virtual patients" for forecasting progression. Providence Genomics CMO Carlo Bifulco calls it a leap for precision oncology that could unlock better therapies for non-responders.

GLM-4.6V: Z.ai’s Open-Source Multimodal AI with Extended Context

Z.ai has open-sourced the GLM-4.6V series: GLM-4.6V (106B parameters) for cloud and high-performance workloads, and GLM-4.6V-Flash (9B) for local, low-latency applications. Both offer a 128K-token context window, enough for roughly 150 pages of documents, 200 slides, or a 1-hour video in a single pass. The models report state-of-the-art results in visual understanding and reasoning on MMBench, MathVista, and OCRBench among open models of similar scale. They also introduce native multimodal function calling, which connects perception to action and powers agents for rich-text generation, visual web search, frontend development ("design-to-code"), and end-to-end search-and-analysis workflows. Images, videos, and documents are processed directly through aligned visual encoders, which avoids the information loss of converting them to text first. The models draw on billion-scale knowledge data, agentic data synthesis, MCP extensions, and reinforcement learning with visual feedback. Training combines continual pre-training on long-context image-text pairs, world-knowledge enhancement, and self-improving agents. GLM-4.6V is accessible via the Z.ai platform, the Zhipu Qingyan app, and an OpenAI-compatible API, with weights on Hugging Face and ModelScope (vLLM and SGLang are supported). Details are in arXiv:2507.01006.
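To make "multimodal function calling over an OpenAI-compatible API" more concrete, here is a minimal sketch of what such a request body might look like. It follows the widely used OpenAI Chat Completions shape (an image part plus a tool definition). The model identifier `glm-4.6v`, the `search_web` tool, and the image URL are placeholders chosen for illustration; the newsletter does not confirm Z.ai's exact model names or endpoint, so check the provider's documentation before sending anything.

```python
import json


def build_request(model: str, image_url: str, question: str) -> dict:
    """Build a chat request with one image and one callable tool (illustrative only)."""
    return {
        "model": model,  # placeholder identifier, not confirmed by the source
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
        # Hypothetical tool: lets the model ask for a web search about what it sees.
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "search_web",
                    "description": "Search the web for background on the image.",
                    "parameters": {
                        "type": "object",
                        "properties": {"query": {"type": "string"}},
                        "required": ["query"],
                    },
                },
            }
        ],
    }


payload = build_request(
    model="glm-4.6v",
    image_url="https://example.com/chart.png",
    question="What does this chart show? Search for context if needed.",
)
print(json.dumps(payload, indent=2))
```

The sketch only builds and prints the JSON body. In a real agent loop, the client would send it to the provider's chat endpoint, run any tool call the model returns, and append the result as a follow-up message.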

OpenAI’s Image-2 Models Are Coming

OpenAI is preparing Image-2 and Image-2-mini, successors to Image-1, which have been spotted under the names "Chestnut" and "Huzzlenut" on LM Arena and Design Arena. The models promise sharper detail and better fidelity, and they fix known issues such as Image-1's yellow tint, putting them in competition with Google's Nano Banana 2 on rendering and color accuracy. Early tests show improved structure and a DALL-E-like style that blends earlier strengths with newer capabilities. Positioned for ChatGPT integration, they target creative professionals who need high-quality assets for design, marketing, and prototyping. Side-by-side evaluations suggest they close the gap with competitors, with an emphasis on versatility. The rollout appears to be timed with GPT-5.2, and code preparations suggest a release is near. OpenAI's push mirrors Google's multimodal surge and should boost AI content creation.

Hand Picked Video

Unlock the future of mobile AI - learn how to run powerful open-source Language Models right on your Android phone! No cloud services, no subscriptions, just pure local AI power in your pocket.

Top AI Products from this week

Finesse by Skippr AI - AI agent that catches design, copy, and accessibility issues before you ship. Works on Localhost, Production, Figma, Lovable, Replit & more. Syncs with coding agents via MCP. Finesse reviews every screen against UX and product best practices, then gives you real-time feedback.

Helploom - Helploom is the most affordable customer support software. It offers unlimited customer support with beautiful Live Chat and Help Center. There's also an AI agent. Unlike others, Helploom offers unlimited messages, users, threads and team members.

Dex - Browser extension that proactively organizes tabs, shortcuts workflows, and handles tasks for you. Your digital mind in Chrome. Turn your browser into an AI command center.

ClickUp 4.0 - Today marks a new era of ClickUp, defined by craft, quality, and convergence. It’s the future of software, converging 50+ apps to maximize human productivity with AI. ClickUp 4.0 is polished, powerful, and perfectly crafted... and it's available for everyone, now.

Guess Whale - Guess Whale flips generative AI on its head: you don’t create the prompt, you guess it! Each round challenges players to reverse-engineer an AI-generated image. Play unlimited free rounds across three difficulty levels, earn stars to unlock collectible whale icons, and compete in a daily Wordle-style challenge.

The Almanac - Almanac lets you type any name and instantly see a professional, public-facing profile for that person. Search yourself or anyone else; if the profile does not exist yet, you can generate it in <10s. We crawl the public web, reconcile sources, and write a detailed, neutral biography with a profile card, timeline, and key highlights.

This week in AI

OpenAI Certifications Launch - OpenAI debuts AI Foundations (in-ChatGPT job skills) and ChatGPT for Teachers courses, piloting with Walmart, universities. Free badges for 10M Americans by 2030 via OpenAI Academy.

OpenAI Hires Slack CEO as CRO - OpenAI appoints Denise Dresser, ex-Slack CEO and Salesforce vet, as Chief Revenue Officer to scale enterprise AI adoption via global sales and customer success.

DiffSynth Studio Single-Image LoRA Generator - ModelScope drops Qwen-Image-i2L suite—first open-source tools turning one image into custom LoRAs: Style (2.4B aesthetic), Coarse (7.9B content+style), Fine (7.6B 1024x1024 detail), Bias (30M alignment). Built on SigLIP2+DINOv3+Qwen-VL. Code on GitHub.

Qwen Code v0.2.2-v0.3.0 Update - Qwen Code adds Stream JSON for structured I/O/streaming, full i18n (EN/CN + custom langs), and major security/stability fixes: memory limits, Windows encoding, auth refactor, ModelScope support. Global lang packs welcome!

OpenAI’s 2025 Enterprise AI Report - OpenAI’s first enterprise AI report finds 75% of workers say AI improves speed or quality of their work, with complex “reasoning token” use surging and a 6x productivity gap emerging between AI power users and typical employees across companies.

Paper of The Day

A new method adapts attention and in-context learning ideas to tabular data by fitting a personalized lasso model for each test point, weighted by random forest proximity. It blends a global baseline with local fits for both interpretability and performance, beats lasso on UCI datasets, and rivals XGBoost. Clustering the fitted coefficients reveals heterogeneity in the data.

To read the whole paper 👉️ here