- AI Report by Explainx

Google to Bring AI Computing to Space

AI expands its horizons as Google plans solar-powered AI satellites, OpenAI champions cultural inclusivity, and Maya Research opens emotional voice synthesis to all

The AI frontier continues to expand into uncharted domains, from harnessing the Sun’s energy for orbital AI computing to building culturally grounded language benchmarks and democratizing emotional voice synthesis.

🌞 Google’s Suncatcher – Google plans to launch solar-powered satellites equipped with TPUs to run AI in orbit. By using direct solar energy and optical links, these space-based data centers aim to deliver sustainable, high-performance AI computing starting with prototypes in 2027.

🪷 OpenAI’s IndQA Benchmark – OpenAI introduces IndQA to evaluate how AI understands Indian languages and culture. Covering 2,278 expert-authored questions across 12 languages, it promotes fair and inclusive AI testing for India’s diverse population.

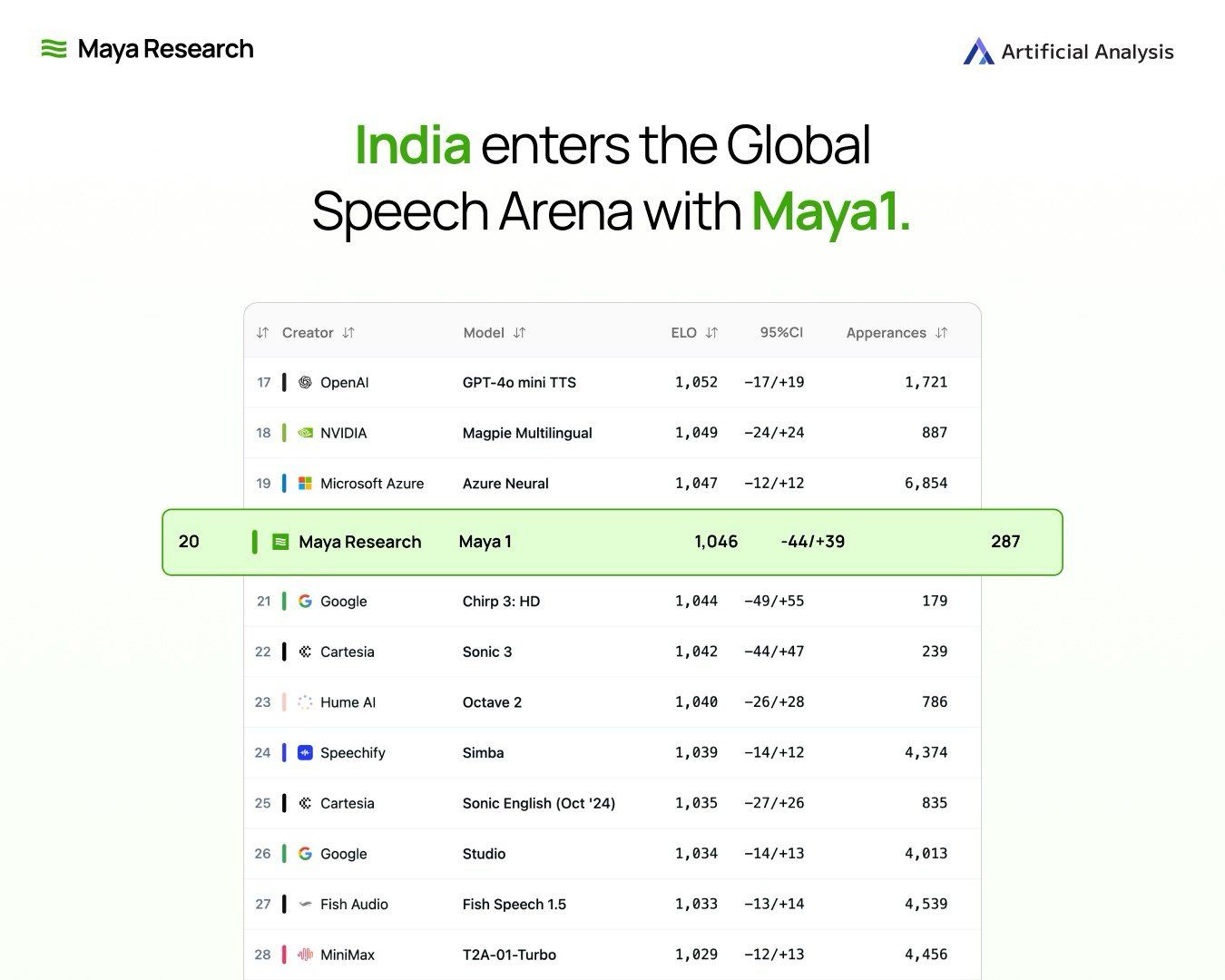

🎙️ Maya1 Emotional Voice AI – Maya Research releases Maya1, an open-source model for real-time expressive voice synthesis with 20+ emotions. Running on a single GPU under Apache 2.0, it offers a free, customizable alternative to proprietary voice tools.

From solar-powered space computing to local-language reasoning and open emotional AI, these breakthroughs mark a pivotal moment in making intelligence more planetary, personal, and participatory.

Google’s Suncatcher Aims to Bring AI Computing to Space

Google has announced Project Suncatcher, a research initiative to design scalable AI infrastructure in space. The project envisions a constellation of solar-powered satellites equipped with Google’s Tensor Processing Units (TPUs) and interconnected via high-bandwidth free-space optical links, tapping the Sun’s energy directly for AI computing. Placed in a sun-synchronous low Earth orbit, the satellites could generate significantly more solar power, and more continuously, than Earth-based facilities, while minimizing the impact on terrestrial resources and cooling needs. Early prototypes are planned for launch in 2027 to test distributed machine learning tasks and the resilience of TPUs against space radiation.

OpenAI Launches IndQA for Indian Language AI Evaluation

OpenAI has introduced IndQA, a new benchmark designed to assess how well AI systems understand and reason about questions rooted in Indian culture and languages. It covers 2,278 questions across 12 languages and 10 cultural domains such as history, literature, and everyday life, and was developed with input from 261 Indian domain experts. Existing multilingual benchmarks often fail to capture nuanced, culturally specific knowledge; IndQA addresses that gap with expert-authored, reasoning-focused prompts and rubric-based grading. The initiative is a step toward more equitable AI evaluation, aiming to support linguistic and cultural diversity at scale and to improve AI accessibility and relevance for India’s vast non-English-speaking population.
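To make "rubric-based grading" concrete, here is a minimal sketch of one common way such a score can be computed: each criterion carries a point weight, and the score is the share of points earned. The `Criterion` class, the weights, and the sample criteria are illustrative assumptions, not IndQA's actual rubric format or grader.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """One rubric line. The structure and weights are hypothetical."""
    description: str
    points: float


def rubric_score(criteria, met):
    """Return earned points divided by total possible points (0.0 to 1.0).

    `met` holds one boolean per criterion, e.g. produced by a human or
    model-based grader (assumed here, not implemented).
    """
    if len(criteria) != len(met):
        raise ValueError("need exactly one judgment per criterion")
    total = sum(c.points for c in criteria)
    if total <= 0:
        raise ValueError("rubric must have positive total points")
    earned = sum(c.points for c, ok in zip(criteria, met) if ok)
    return earned / total


# Illustrative rubric for a single question (made-up content).
criteria = [
    Criterion("Names the correct festival", 3),
    Criterion("Explains its regional significance", 2),
    Criterion("Answers in the language of the question", 1),
]
score = rubric_score(criteria, [True, False, True])
print(f"{score:.2f}")
```

Weighting lets an expert mark which parts of an answer matter most, so a response that gets the central fact right but misses a detail still earns partial credit.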

Maya1: Open-Source Voice AI with Real Emotion

Maya Research has released Maya1 on Hugging Face, an open-source voice AI model built on a 3-billion-parameter Llama-style transformer and optimized for expressive, real-time voice generation with 20+ emotions. The production-ready model runs on a single GPU and streams audio via the SNAC neural codec, enabling rich, customizable voices for applications from game characters to podcast narration. It is released under an Apache 2.0 license, which allows free commercial use and customization. Users design voice attributes through natural language prompts and embed emotion tags directly in the text, giving them an open alternative to proprietary solutions like ElevenLabs, with no per-use fees and full deployment control. The release is a notable step toward democratizing voice AI and making expressive, dynamic voice synthesis accessible globally, beyond traditional English-centric tools.
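As a rough illustration of combining a natural language voice description with inline emotion tags, the sketch below assembles a prompt string and rejects unknown tags. The `<description="...">` wrapper, the angle-bracket tag syntax, and the tag names are assumptions made for this example; check the Maya1 model card for the real prompt format and supported emotions.

```python
import re

# Hypothetical subset of emotion tags; the real list may differ.
ALLOWED_TAGS = {"laugh", "whisper", "sigh"}

# Matches inline tags of the assumed form <tag_name>.
TAG_PATTERN = re.compile(r"<([a-z_]+)>")


def find_unknown_tags(text):
    """Return the sorted tag names in `text` that are not in ALLOWED_TAGS."""
    return sorted({t for t in TAG_PATTERN.findall(text) if t not in ALLOWED_TAGS})


def build_prompt(description, text):
    """Combine a voice description and tagged text into one prompt string.

    The output layout is an assumed format for illustration only.
    """
    unknown = find_unknown_tags(text)
    if unknown:
        raise ValueError(f"unsupported emotion tags: {unknown}")
    return f'<description="{description}"> {text}'


prompt = build_prompt(
    "Warm female narrator, calm pacing",
    "Welcome back <laugh> to the show.",
)
print(prompt)
```

Validating tags before synthesis catches typos early, which is cheaper than discovering them in generated audio.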

Hand Picked Video

In this video, we'll look at creating a Chrome Extension from scratch using Vibe Code.

Top AI Products from this week

MeDo - MeDo is an AI-powered no-code app builder that turns your ideas into fully functional web apps. Just describe what you want to build, and MeDo automatically generates the front-end, back-end, and database - all in one place.

Extra Thursday - Extra Thursday treats your inbox like a dynamic workspace, summarizing threads, tracking action items, and drafting replies so you can focus on meaningful work, not inbox overload.

Context Link - Context Link is an AI-powered tool that lets you connect your existing content—like Google Docs, Notion pages, or websites—and turn it into searchable context for AI tools. Just link your sources, and Context Link makes them instantly queryable by meaning, not just keywords.

Snyk - Powered by DeepCode AI, Snyk automatically prioritizes and suggests fixes for the most critical risks. Whether you’re an individual developer or part of a large enterprise, Snyk makes it easy to build fast and stay secure from the start.

Arcitext - Arcitext is an AI-powered writing assistant designed to help you craft polished text faster. You input your ideas (a prompt, bullet list, or rough paragraph), and Arcitext transforms them into well-structured content tailored to your purpose (blog, email, report, etc.). It offers tone adjustments and formatting options, and saves you the repetitive effort of drafting from scratch.

This week in AI

Google Maps Adds AI Live Lane Guidance – AI-powered live lane guidance in Google Maps now helps Polestar 4 drivers navigate lanes in real time using the car’s camera, reducing missed exits. The feature will expand to more cars and roads.

AI Chatbots Struggle to Distinguish Fact from Belief – A new Stanford University study reveals that major AI chatbots have difficulty consistently differentiating between beliefs and facts.

Schaeffler Partners with Neura on Humanoid Robots – Schaeffler teams up with Neura Robotics to develop and supply components for humanoid robots, planning to deploy thousands by 2035 and advancing AI ecosystem collaboration.

Perplexity AI Adds Meeting Automation – Perplexity plans to expand its assistant with meeting automation tools. The upcoming features will allow the assistant to automatically join meetings, record sessions, and send recaps to specified recipients, reducing manual note-taking.

AWS Launches FastNet Subsea Cable – AWS has deployed the FastNet subsea cable connecting the U.S., UK, and mainland Europe to enhance cloud network speed and reliability. The cable will support growing demand for data transfer with increased capacity and lower latency, strengthening AWS’s global infrastructure.

Paper of The Day

The paper proposes the use of Agentic AI, an autonomous system framework integrating large AI models with planning, memory, and reasoning capabilities, for managing and optimizing 5G and future 6G Radio Access Networks (RANs). It describes a modular, scalable architecture where specialized AI agents collaboratively monitor network performance, detect anomalies, identify root causes, and execute corrective actions with minimal human intervention. By enabling real-time, intent-driven automation, this approach aims to handle the increasing complexity of modern mobile networks, improving efficiency, resilience, and adaptability in network operations.
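The monitor, detect, diagnose, and act flow described above can be sketched as a chain of small agents. This is a toy illustration only: simple threshold rules stand in for the large AI models the paper has in mind, and every name, KPI field, threshold, and action label here is hypothetical rather than taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CellKpi:
    """Hypothetical per-cell measurements reported by a monitoring agent."""
    cell_id: str
    drop_rate: float  # fraction of dropped connections
    load: float       # fraction of capacity in use


def detect_anomalies(kpis, max_drop_rate=0.05):
    """Anomaly agent: flag cells whose drop rate exceeds a threshold."""
    return [k for k in kpis if k.drop_rate > max_drop_rate]


def diagnose(kpi, high_load=0.9):
    """Root-cause agent: a rule-based stand-in for model-driven reasoning."""
    return "congestion" if kpi.load >= high_load else "unknown"


def plan_action(cause):
    """Action agent: map a cause to a corrective step, else involve a human."""
    return {"congestion": "rebalance_load"}.get(cause, "escalate_to_operator")


def run_pipeline(kpis):
    """Chain the agents and return the chosen action per anomalous cell."""
    return {k.cell_id: plan_action(diagnose(k)) for k in detect_anomalies(kpis)}


samples = [
    CellKpi("A", drop_rate=0.01, load=0.50),
    CellKpi("B", drop_rate=0.08, load=0.95),
    CellKpi("C", drop_rate=0.10, load=0.40),
]
actions = run_pipeline(samples)
print(actions)
```

Keeping each stage as a separate function mirrors the modular design the summary describes: any one agent can be swapped for a more capable model without touching the others.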
