- AI Report by Explainx

Google just casually lied to us

The Google Lie - diving into Multimodality, the Gemini Demo and the benchmarks shared by Google.

Last week, Google's unveiling of Gemini Ultra set the internet ablaze.

It was hailed as the next leap in AI and pitched directly against OpenAI's GPT-4.

The so-called “AI influencers” had their day across LinkedIn, YouTube, and elsewhere. They started referring to OpenAI’s GPT-4 as a toy, the same model they probably used to generate their content.

None of them spent 10 minutes reading the documentation Google released alongside the model.

But some folks did. It was all a lie.

How? Let’s first understand why this was a big deal in the first place.

Multimodality.

Multimodal AI is a type of artificial intelligence that can process and understand various types of data, such as text, images, sounds, and videos. Unlike unimodal AI, which is limited to a single data type, multimodal AI often uses separate modality-specific neural networks to extract important features from each data type and then combines those features.

This approach allows for a more comprehensive understanding of complex information, as it can analyze and integrate insights from diverse sources, making the AI systems more versatile and effective in real-world applications.
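To make the “separate networks per data type, then combine” idea concrete, here is a minimal sketch of one common design, late fusion, where each modality is encoded on its own and the resulting features are concatenated. The encoders below (`encode_text`, `encode_image`, `encode_audio`) are hypothetical toy stand-ins for real neural networks, and nothing here reflects how Gemini or GPT-4 are actually built.

```python
from typing import Callable, Dict, List

# In this toy sketch a feature vector is just a list of floats.
Vector = List[float]


def encode_text(text: str) -> Vector:
    # Hypothetical stand-in for a text encoder: word count and average word length.
    words = text.split()
    avg_len = sum(len(w) for w in words) / len(words) if words else 0.0
    return [float(len(words)), avg_len]


def encode_image(pixels: List[List[float]]) -> Vector:
    # Hypothetical stand-in for an image encoder: mean and max brightness.
    flat = [p for row in pixels for p in row]
    return [sum(flat) / len(flat), max(flat)]


def encode_audio(samples: List[float]) -> Vector:
    # Hypothetical stand-in for an audio encoder: mean absolute amplitude.
    return [sum(abs(s) for s in samples) / len(samples)]


# One (unimodal) encoder per data type.
ENCODERS: Dict[str, Callable] = {
    "text": encode_text,
    "image": encode_image,
    "audio": encode_audio,
}


def fuse(inputs: Dict[str, object]) -> Vector:
    """Late fusion: encode each modality separately, then concatenate the features."""
    fused: Vector = []
    for modality in sorted(inputs):  # fixed order keeps the feature layout stable
        fused.extend(ENCODERS[modality](inputs[modality]))
    return fused


# Usage: combine a caption with a tiny 2x2 "image".
features = fuse({"text": "rock paper scissors", "image": [[0.0, 0.5], [1.0, 0.5]]})
print(features)
```

In a real system the fused vector would feed a downstream model; concatenation is only the simplest way to combine modalities, chosen here for readability.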

By 2023, the landscape of multimodal AI models, capable of processing and understanding a variety of data types like text, images, and audio, had expanded significantly. Apart from Google's Gemini Ultra, the most obvious example is OpenAI's GPT-4: you can upload images, talk to it, and get spoken replies, so you already have access to a really powerful multimodal model.

Beyond these, there’s Microsoft's Kosmos-1, a multimodal large language model (MLLM) trained on web data, including text and images, as well as image-caption pairs.

There’s also Meta's ImageBind, though it’s not quite there yet.

The point is, this wasn’t something new. So why was the world freaked out?

Well, the demo.

The demo is cool.

So how did Google lie to us?

Magic! Like everything else on the internet, the video was made to “look” good. In other words, it was aggressively edited to hide the parts where the model either failed or needed more information. How do we know? Google told us all.

Take the segment where Gemini recognises “rock, paper, scissors”. In the video, Gemini appears to recognise the game in one shot, from the hand gestures alone.

What actually happened is explained in Google’s own blog post: the prompt included the line “Hint: it’s a game.” That small sentence changes everything.

It tells the model to stop considering every possible interpretation of the gestures and instead focus on the small fraction involving games played with human hands.

Even more surprising: if you give GPT-4 with vision these exact same details, it would give you the same answer. So Gemini didn’t break the world; Google just didn’t share everything with us.

Next, the benchmarks:

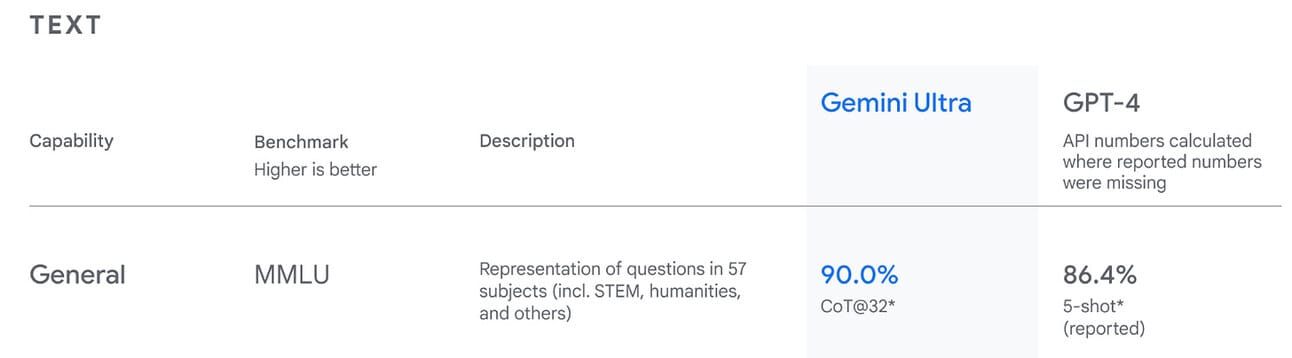

The benchmarks present Gemini Ultra as the superior model. But what isn’t obvious is that the headline comparison mixes two different evaluation methods: CoT@32 (chain-of-thought prompting with 32 sampled answers) and 5-shot (five worked examples in the prompt). If you compare Gemini and GPT-4 using the same method (5-shot), GPT-4 does better. With CoT@32, on the other hand, Gemini comes out ahead.
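To see why the evaluation method matters, here is a minimal sketch of scoring the same model outputs two ways: once using a single answer per question, and once using a majority vote over several sampled chain-of-thought answers. It assumes we already have the sampled answers as strings; the toy data uses 4 samples per question instead of 32 to keep it short, and Google’s own CoT@32 procedure is more involved than a plain vote.

```python
from collections import Counter
from typing import List


def majority_vote(samples: List[str]) -> str:
    """Return the most common answer among the sampled chain-of-thought answers."""
    return Counter(samples).most_common(1)[0][0]


def single_answer_accuracy(first_answers: List[str], gold: List[str]) -> float:
    """Score one answer per question, as in a single-attempt k-shot evaluation."""
    correct = sum(a == g for a, g in zip(first_answers, gold))
    return correct / len(gold)


def cot_at_k_accuracy(samples_per_question: List[List[str]], gold: List[str]) -> float:
    """Score the majority answer over k samples per question (a CoT@k-style vote)."""
    correct = sum(majority_vote(s) == g for s, g in zip(samples_per_question, gold))
    return correct / len(gold)


# Toy data (made up for illustration): correct answers and 4 sampled answers each.
gold = ["B", "C", "A", "D"]
samples = [
    ["B", "B", "A", "B"],  # first sample right, majority right
    ["A", "C", "C", "C"],  # first sample wrong, majority right
    ["D", "A", "A", "B"],  # first sample wrong, majority right
    ["D", "D", "C", "D"],  # first sample right, majority right
]
first = [s[0] for s in samples]

print(single_answer_accuracy(first, gold))  # 0.5
print(cot_at_k_accuracy(samples, gold))     # 1.0
```

Same outputs, two very different scores: voting over many samples smooths out individual mistakes, which is why a CoT@32 number should not be compared directly against a 5-shot number.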

Why All the Fuss?

This raises the question: why all the showmanship?

It seems to be a mix of marketing genius and a demonstration of potential capabilities.

But it also raises concerns about transparency in AI advancements.

Final Thoughts

While Gemini Ultra boasts impressive benchmarks, reportedly surpassing human experts in some areas, there’s skepticism. Benchmarks, especially those not from neutral sources, can be misleading. The real test is everyday use, where the model’s true capabilities become clear.

Other AI News

Mistral AI and EU's AI Sovereignty: The European Union has reached agreement on a new set of rules for regulating artificial intelligence, highlighting the increasing global focus on AI governance. Meanwhile, the Paris-based startup Mistral AI has closed a significant funding round of $415 million, despite the potential regulatory challenges.

Elon Musk's AI Chatbot 'Grok': xAI, an AI startup by Elon Musk, launched Grok, a ChatGPT competitor. This AI chatbot has started rolling out to Premium+ subscribers on X, the platform formerly known as Twitter. This marks a significant step in the competitive AI chatbot landscape.

Meta's AI-Powered Image Generator: Meta, not to be outdone by Google’s Gemini launch, rolled out a new standalone generative AI experience on the web, called "Imagine with Meta". This allows users to create images by describing them, signaling a significant move in the generative AI space.

Other AI Developments: There were several other notable developments in the AI space, including the launch of an enterprise version of DataCebo's open-source synthetic data library, the introduction of Avail’s AI summarization tool in Hollywood for script coverage, and Rhythms using AI to identify working patterns of top-performing teams for productivity enhancement.

My favourite AI tool this week

Chrome Co-pilot: turned my browser into a real-time ChatGPT.